Sat Mag

From Kissinger to Trump: A brief history of international relations

By Uditha Devapriya

More than a mere difference of rhetoric sets the US National Defense Strategy of 2008 apart from the updated 2018 version. The Republicans held power when both documents were published, though the second Bush administration would give way to the Obama presidency a few months after the release of the first. Both came against the backdrop of shifting geopolitical concerns: the 2008 brief in the aftermath of Iraq and Afghanistan, the 2018 brief in the aftermath of the ascendancy of China.

It’s not a little significant that the second document devotes less attention than the first to the threat of Islamic extremism. Significant, also, is the point that the 2008 brief names two countries as rogue states (Iran and North Korea), while the 2018 brief adds two more to its list of adversaries (China and Russia). There you have the main difference: the first emphasises cooperation with allies and competitors, the second counts the competitors among its enemies. In that sense it’s a more fundamentalist version of George W. Bush’s coalition of the willing and his bellicose “if you’re not with us you’re against us” jingoism.

The US has been grappling with how best to advance its interests in the world, and throughout much of the 20th century, particularly during the Cold War, the issue boiled down to the debate between the realists and the idealists. Since the end of the Cold War this debate has been overlaid by another: between the neoconservatives and the neoliberals. In foreign policy terms, especially as far as countries like Sri Lanka are concerned, there’s very little difference between these groups: both believe in the furtherance of American interests abroad, even if their tactics diverge. US foreign policy, in other words, differs at the level of theory at home but remains roughly the same in terms of the objectives it achieves abroad.

Is there a point, then, in differentiating between these schools of thought, be they idealist, realist, neoconservative, or neoliberal? I think so, for one cardinal reason.

The cardinal reason is that theory may be grey (as Goethe saw it), but more often than not it is a guide to action (as Marx and Engels saw it). How can we begin to understand a particular administration without understanding the justifications it puts out for interventions in other parts of the world? Policy documents matter because they are adhered to, whether in the breach or the observance. Any student of US foreign policy will tell you that US presidents have resorted to doctrines and communiqués to validate their actions overseas. It is more than intellectual laziness, therefore, to shrug off US administrations as being one and the same. At a fundamental level, to be sure, no difference exists, but fighting one’s way through the fog, one finds the little details that help distinguish policies from each other.

Take the 2008 and the 2018 National Defense Strategies. Both are premised on the need to preserve America’s interests. But where the 2008 brief lays down five short objectives, the 2018 brief lays down 11 long ones. The first emphasises states as the fundamental unit of the international order (“even though the role of non-state actors in world affairs has increased”), while the second document shifts the emphasis slightly to non-state players. Both view the prevalence of one over another as beneficial to their interests: indeed, the emergence of non-state actors over states is welcomed in the 2018 brief, since “multilateral organizations, non-governmental organizations, corporations, and strategic influencers provide opportunities for collaboration and partnership.” Even if the overarching objective – “Defend the Homeland” in the first brief and “Defend the homeland from attack” in the second – has not altered, US perception of shifting geopolitical realities can, and will, change.

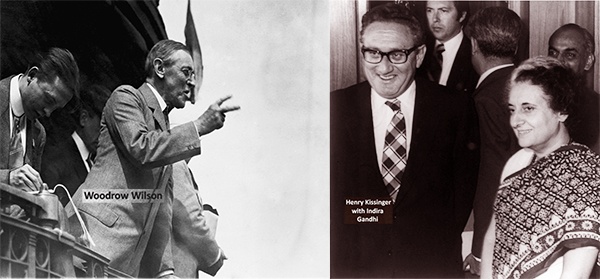

Kissinger was arguably the first American political theorist to underline the need for foreign policy to respond to such changing geopolitical realities. The foremost exponent of realism in international relations of the 20th century, he believed that strategy should be planned and implemented “on the basis of the other side’s capabilities and not merely a calculation of its intentions.” Where the US was going wrong in the Cold War, he opined, was its inability to distinguish between a legitimate order – a rules-based one – and a revolutionary order – one in which countries refuse to accept “either the arrangements of the settlement or the domestic structure of other states.” It all boiled down to power: “relations cannot be conducted without an awareness of power relationships.” Overemphasis on theory, Kissinger added, “can lead to a loss of touch with reality”, and reality can change as much abroad as at home.

The shift from idealism to realism transpired in the interwar period. As Europe was to realise to its cost, the Treaty of Versailles, with its assumption that states would cooperate with each other to prop up order and preserve peace, squeezed the middle classes of Germany and Italy to such an extent that the inevitable outcome had to be fascism on one hand and isolationism on the other. The predominance of idealism in international relations thus ended up producing a backlash against it, as events in the Sudetenland were to prove.

Who were the idealists in America? Woodrow Wilson, mainly. Historians remain divided on whether the Wilsonian League of Nations, which the US never joined, did more to provoke than to prevent the resumption of war. His insistence on unanimity, even in the face of opposition from Congress, tends to paint him in an unflattering light today. Yet it can also be argued that Wilson’s uncompromising intransigence gave the impetus for his successors to take up the cause of an alliance-driven world order as and when the situation demanded it. In that sense his successor was Franklin Roosevelt, an irony given that the ultimate realist US President prior to World War I had been Roosevelt’s distant cousin, Theodore.

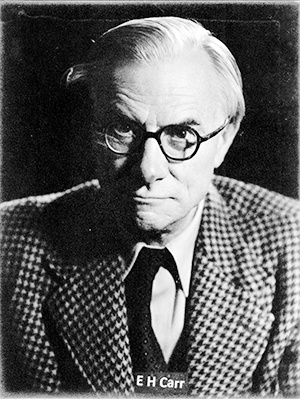

Where Wilson and the League of Nations, into which he put much laudable effort, failed was in their patent inability to manage power relationships between nations. As E. H. Carr and Hans Morgenthau were to highlight in their writings, relations exist at the level of nations. Peace cannot be maintained on the basis of an assumption of unity. For such unity to prevail, power must be balanced.

Hence the rude awakening which was World War II propelled the US to embrace realism as the cornerstone of its foreign policy.

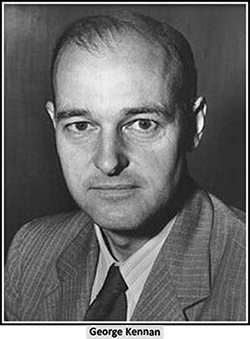

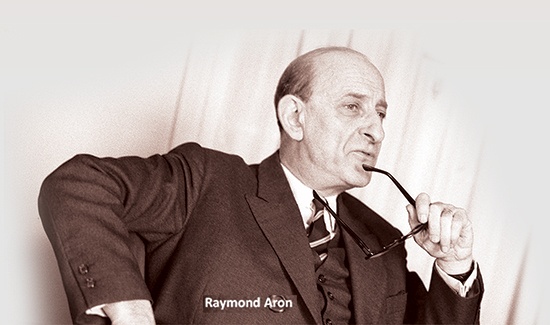

Realism in post-war America (and Western Europe) is typically identified with three political theorists: Kissinger, George Kennan, and Raymond Aron. Their conceptions of realpolitik differed somewhat from one another. Kissinger prided himself on being another Metternich, the 19th-century Austrian diplomat who, through an amalgam of cynical power politics and deft negotiation, maintained peace in Europe against the Bonapartist threat by way of Concerts, Alliances, and intrigue. Kennan gained influence as the foremost exponent of containment, arguing that the Soviet threat could be countered through an anticommunist federation in Western Europe; this gradually put him on a collision course with the Truman administration. Aron saw politics as irreducible to morals, yet somehow comes off as more ethically minded than Kissinger or Kennan; the thesis that the state holds a monopoly over the use of force, he says, does not extend to relations between nations. Thus for Aron, unlike for Kissinger and to a lesser extent for Kennan, the ideal must be liberal democracy. In that sense he is more of a classical realist, in the mould of Reinhold Niebuhr and Hans Morgenthau.

At any rate, idealism could not win because the War defeated one party and brought another to power: the fall of fascism in the western half of Europe was followed by the resurgence of Communism in the eastern half. This was a situation palpably different from the status quo for much of the interwar period until the late 1920s, when conflicts could be defused, no matter how clumsily or ineffectually, through temporary alliances forged between one set of nations against another. Post-war America, on the other hand, had to face not just Soviet aggression, but the reality of decolonisation giving way either to Third World alliances with power blocs or to the strengthening of Third World neutralism through the Non-Aligned Movement.

Historians and political theorists have not given the emphasis it deserves to the role of the Non-Aligned Movement in defusing tensions between the US and the Soviet Union. Where they touched on nonalignment, US officials were almost always critical: John Foster Dulles called it immoral, to give one example. Kissinger was more dismissive than critical: he took it for granted that given the cultural gulf between the US and the Third World, alliances with the latter were simply not going to work. He identified, however, the basis of the Movement in the undercurrents of tension between the two power blocs: “Nehru’s neutrality is possible,” he crisply noted, “only as long as the United States remains strong.” Once US power went for a six, the Movement would sooner or later come tumbling down.

The point I’m trying to make here is that realpolitik as the cornerstone of US foreign policy could thrive because of two divisions in the world order at the time: not just between free market capitalism and “godless” communism, but also between alignment and neutrality. The use of Third World proxies in the Cold War made it possible for the US (and the Soviets) to project their dominance, while the risk of provoking opposition from the nonaligned Third World concurrently checked any escalation into open war.

Realism, in other words, could not have prevailed without Third World unity, which acted as a bulwark and a dedicated player in the cause of neutrality against interventions by the two superpowers. Kissinger’s claim that the “uncommitted nations” relied in their dealings with the new post-war world order on an “overestimation of the power of words”, and that this made them beholden to Soviet antiwar propaganda, is thus reductionist and erroneous; in asserting that they were incapable of grasping “the full impact of industrialism”, and with it the new order, he was betraying his Orientalist prejudices. No wonder Edward Said devoted space to Kissinger in the opening pages of his book on the subject, Orientalism.

It is not technically correct to say that realism ended with Kissinger, even if realpolitik faded away as the guiding principle of US foreign policy with the rise of neoliberalism at the tail-end of the 1970s and of neoconservatism in the 1990s. Its decline had to do with two historical developments: the shift from the Old to the New Right in the West, and the slow strangulation of Communism. As I have noted in my essays on the Non-Aligned Movement, these transformations eroded neutralism’s relevance.

Noam Chomsky is largely right when he argues that the US privileges its interests no matter what the administration is (which is ironic given his cautious endorsement of Biden-Harris over Trump-Pence at the US election). But this does not necessarily mean the realist-idealist disjuncture should be ignored altogether. Kissingerian pragmatism has eroded not because the US is the sole superpower, but because it is not: the world since 1995, according to Jessica Mathews (“What Foreign Policy for the US?”, New York Review of Books, September 24, 2015), has presented a conundrum for the formulation of US foreign policy because of five factors: the shift from diplomatic initiative to military power, globalisation, the 9/11 attacks, the growth of China, and Russia’s shift to the East.

It is with these points in mind that we ought to read policy documents from Washington. Even as we take them with a pinch of salt, understanding that administrations differ in formulating foreign policy only at the tactical and not the strategic level, we must pay attention to the texts and contexts that colour the rhetoric of these documents.

It is curious that the egoistic ethos of the Trump administration and the analytical rigour of the Bush administration come through in the two strategy documents I outlined earlier: the 2008 policy document ends with a pledge that the Department of Defense “stands ready to fulfil its mission”, while the 2018 one reiterates that very same pledge through the personal assurance of its author, James Mattis. The one does not personalise; the other does nothing but personalise. Yet underlying both is a deeply Manichean view of the world. How the US projects this view through different windows and curtains is the challenge those involved in foreign policy, particularly in countries such as ours, must face and meet.

The writer can be reached at udakdev1@gmail.com

End of Fukuyama’s last man, and triumph of nationalism

By Uditha Devapriya

What, I wonder, are we to make of nationalism, the most powerful political force we have today? Liberals dream on about its inevitable demise, rehashing a line they’ve been touting since goodness-knows-when. Neoliberals do the same, except their predictions of its demise have less to do with the utopian triumph of universal values than with their undying belief in the disappearance of borders and the interlinking of countries and cities through the gospel of trade. Both are wrong, and grossly so. There is no such thing as a universal value, and even those described as such tend to differ in time and place. There is such a thing as trade, and globalisation has made borders meaningless. But far from making nationalism meaningless, trade and globalisation have in fact bolstered its relevance.

The liberals of the 1990s were (dead) wrong when they foretold the end of history. That is why Francis Fukuyama’s essay reads so much like a wayward prophet’s dream today. And yet, those who quote Fukuyama tend to focus on his millenarian vision of liberal democracy, with its impending triumph across both East and West. This is not all there is to it.

To me what’s interesting about the essay isn’t his thesis about the end of history – whatever that meant – but what, or who, heralds it: Fukuyama’s much ignored “last man.” If we are to talk about how nationalism triumphed over liberal democracy, how populists trumped the end of history, we must talk about this last man, and why he’s so important.

In Fukuyama’s reading of the future, mankind gets together and achieves a state of perfect harmony. Only liberal democracy can galvanise humanity to aspire to and achieve this state, because only liberal democracy can provide everyone enough of a slice of the pie to keep us and them – majority and minority – happy. This is a bourgeois view of humanity, and indeed no less a figure than Marx observed that for the bourgeoisie, the purest political system was the bourgeois republic. In this purest of political systems, this bourgeois republic, Fukuyama sees no necessity for further progression: with freedom of speech, the right to assemble and dissent, an independent judiciary, and separation of powers, human beings get to resolve, if not troubleshoot, all their problems. Consensus, not competition, becomes the order of the day. There can be no forward march; only a turning back.

Yet that future state of affairs suffers from certain convulsions. History is a series of episodic progressions, each aiming at something better and more ideal. If liberal democracy, with its championing of the individual and the free market, triumphs in the end, it must be preceded by the erosion of community life. The problem here is that like all species, humanity tends to congregate, to gather as collectives, as communities.

“[I]n the future,” Fukuyama writes, “we risk becoming secure and self-absorbed last men, devoid of thymotic striving for higher goals in our pursuit of private comforts.” Being secure and self-absorbed, we become trapped in a state of stasis; we think we’re in a Panglossian best of all possible worlds, as though there’s nothing more to achieve.

What the last man lacks Fukuyama calls “megalothymia”, or “the desire to be recognised as greater than other people.” Since human beings think in terms of being better than the rest, reaching a point where we no longer need to show we’re better leaves us with a sense of restless dissatisfaction. The inevitable follows: some of us try to find ways of doing something that’ll put us a cut above the rest. In the rush to the top, we end up in “a struggle for recognition.”

Thus the last men of history, in their quest to find some way they can show that they’re superior, run the risk of becoming the first men of history: rampaging, irrational hordes, hell-bent on fighting enemies, at home and abroad, real and imagined.

Fukuyama tries to downplay this risk, contending that liberal democracy provides the best antidote against a return to such a primitive state of nature. And yet even in this purest of political systems, security becomes a priority: to prevent a return to savagery, there must be an adequate deterrent against it. In his scheme of things, two factors prevent history from realising the ideals of humanity, and it is these that make such a deterrent vital: persistent war and persistent inequality. Liberal democracy does not resolve these to the extent of making them irrelevant. Like dregs in a teacup, they refuse to dissolve.

The problem with those who envisioned this end of history was that they conflated it with the triumph of liberal democracy. Fukuyama committed the same error, but most of those who point to his thesis miss the all too important last part of his message: that built into the very foundation of liberal democracy are the landmines that can, and will, blow it up. Yet this does not erase the first part of his message: that despite its failings, liberal democracy can still render other political forms irrelevant, simply because, in his view, there is no alternative to free markets, constitutional republicanism, and the universal tenets of liberalism. There may be such a thing as civilisation, and it may well divide humanity. Such niceties, however, will sooner or later give way to the promise of globalisation and free trade.

It is no coincidence that the latter terms belong in the dictionary of neoliberal economists, since, as Kanishka Goonewardena has pithily put it, no one rejoiced at Fukuyama’s vision of the future of liberal democracy more than free market theorists. But could one have blamed them for thinking that competitive markets would coexist with a political system supposedly built on cooperation? To rephrase the question: could one have foreseen that in less than a decade of untrammelled deregulation, privatisation, and the like, the old forces of ethnicity and religious fundamentalism would return? Between the fall of the Berlin Wall and Srebrenica, barely six years had passed. How had the prophets of liberalism got it so wrong?

Liberalism traces its origins to the mid-19th century. It had the defect of being younger, much younger, than the forces of nationalism it had to fight and put up with. Fast-forward to the end of the 20th century, the breakup of the Soviet Union, and the shift in world order from bipolarity to multipolarity, and you had these two foes fighting each other again, only this time with the apologists of free markets to boot. This three-way encounter or Mexican standoff – between the nationalists, the liberal democrats, and the neoliberals – did not end up in favour of dyed-in-the-wool liberal democrats. Instead it ended up vindicating both the nationalists and the neoliberals. Why it did so must be examined here.

The fundamental issue with liberalism, which nationalism does not suffer from, is that it views humanity as one. Yet humanity is not one: man is man, but he is also rich, poor, more privileged, and less privileged. Even so, liberal ideals such as the rule of law, separation of powers, and judicial independence presume the equality of citizens.

So long as this assumption is limited to political theory, nothing wrong can come out of believing it. The problem starts when such theories are applied as economic doctrines. When judges rule in favour of welfare cuts or in favour of corporations over economically backward communities, for instance, the ideals of humanity no longer appear as universal as they once were; they appear more like William Blake’s “one law for the lion and ox.”

That disjuncture didn’t trouble the founders of European liberalism, be it Locke, Rousseau, or Montesquieu, because for all their rhetoric of individual freedoms and liberties they never pretended to be writing for anyone other than the bourgeoisie of their time. Indeed, John Stuart Mill, beloved by advocates of free markets in Sri Lanka today, bluntly observed that his theories did not apply to slaves or subjects of the colonies. To the extent that liberalism remained cut off from the “great unwashed” of humanity, then, it could thrive because it did not face the problem of reconciling different classes into one category. Put simply, humanity for 19th century liberals looked white, bourgeois, and European.

The tail-end of the 20th century could not have been more different from this state of affairs. I will not go into why, but I will say that between the liberal promise of all humanity merging as one, the nationalist dogma of everyone pitted against everyone else, and the neoliberal paradigm of competition and winner-takes-all, the winner could certainly not be ideologues who believed in the withering away of cultural differences and the coming together of humanity. As the century drew to a close, it became increasingly obvious that the winners would be the free market and the nationalist State. How exactly?

Here I’d like to propose an alternative reading of not just Fukuyama’s end of history and last man, but also the triumph of nationalism and neoliberalism over liberal democracy. In 1992 Benjamin Barber wrote an interesting, if controversial, essay titled “Jihad vs. McWorld” for The Atlantic, in which he argued that two principles governed the post-Cold War order, and of the two, narrow nationalism threatened globalisation. Andre Gunder Frank wrote a reply to Barber in which he contended that, far from opposing one another, narrow nationalism, or tribalism, in fact resembled the forces of globalisation – free markets and free trade – in how they promoted the transfer of resources from the many to the few.

For Gunder Frank, the type of liberal democracy Barber championed remained limited to a narrow class, far too small to be inclusive and participatory. In that sense “McWorldisation”, or the spread of multinational capital to the most far-flung corners of the planet, would not lead to the disappearance of communal or cultural fragmentation, but would rather bolster and lay the groundwork for such fragmentation. Having polarised entire societies, especially those of the Global South, along class lines, McWorldisation becomes a breeding ground for the very “axial principle” Barber saw as its opposite: “Jihadism.”

Substitute neoliberalism for McWorldisation, nationalism for Jihadism, and you see how the triumph of one has not led to the defeat of the other. Ergo, my point: nationalism continues to thrive, not just because (as is conventionally assumed) liberal democracy à la Francis Fukuyama failed, but more importantly because, in its own way, neoliberalism facilitated it. Be it Jihadism there or Jathika Chintanaya here, in the Third World of the 21st century, what should otherwise have been a contradiction between two forces opposed to each other has instead become a union of two opposites. Hegel’s thesis and antithesis have hence become a grand franken-synthesis, one which will govern the politics of this century for as long as neoliberalism survives, and for as long as nationalism thrives on it.

The writer can be reached at udakdev1@gmail.com

Chitrasena: Traditional dance legacy perseveres

By Rochelle Palipane Gunaratne

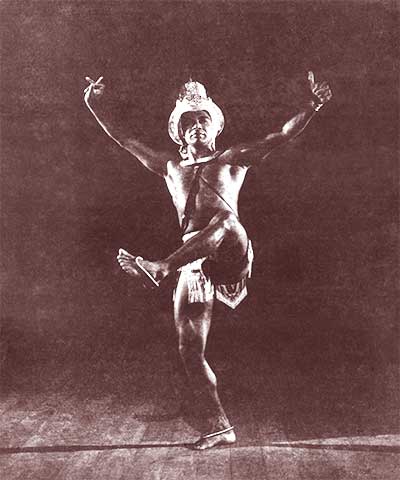

Where would Mother Lanka’s indigenous dance forms be, if not for the renaissance of traditional dance in the early 1940s? January 26, 2021 marked the 100th birth anniversary of the legendary Guru Chitrasena who played a pivotal role in reviving a dance form which was lying dormant, ushering in a brand new epoch to a traditional rhythmic movement that held sway for over two millennia.

“There was always an aura that drew us all to Seeya and we were mesmerized by it,” enthused Heshma, Artistic Director of the Chitrasena Dance Company and eldest grand-daughter of the doyen of dance. She reminisced about her legendary grandfather during a brief respite from working on a video depicting his devotion to a dance form that chose him.

“Most classical art forms require a lifetime of learning and dedication as it’s also a discipline which builds character and that is what we have been inculcated with by Guru Chitrasena, who also left us with an invaluable legacy,” emphasized Heshma, adding that it makes everything else pale in comparison and provides the momentum even when faced with trials.

Blazing a dynamic trail

The patriarch’s life and times resonated with an era of change in Ceylon: here was an island nation almost overshadowed by a gigantic peninsula whose influence had been colossal. Colonization by the western empires had meant further suppression for over four centuries. Yet, hidden in the island’s folds were artistes, dancers and others who held on almost devoutly to their sacred doctrines. The time was ripe for the harvest and the need for change was almost palpable. Into this era was born Chitrasena, who took the bull by the horns and led the revival all the way to the world stage.

He literally coaxed the hidden treasures of the island out of the Gurus of old, whose birthright was the traditional dance forms and who had neither a need nor a desire for the stage. Their repertoire was relegated to village ceremonies, peraheras and ritual sacrifices. The nobles of the time sometimes entertained themselves watching these ‘devil dancers.’ In fact, some of these traditional dancers are said to have been taken abroad in the late 1800s as part of a ‘human circus’ act.

But how did Chitrasena change that thinking? He went in search of these traditional Gurus, lived with them, learned the traditions and then re-presented them as a respectable dance art on the stage. He revolutionized the manner in which we, colonized islanders, viewed what was endemic to us; suffice it to say he gave it the pride and honour it deserved, though at the cost of a supreme sacrifice: a lifetime of commitment to dancing, braving the criticism and other challenges that were constantly put up to deter him. Not only did he commit himself to this colossal task, but the involvement of his immediate family and the family of dancers was exceptional, bordering on devotion, as their lives revolved around dance alone.

Imbued in them is the desire to dance and to share their knowledge with others, and this is done through various means, such as giving prominence to Gurus of yore; hence the Guru Gedara Festival, which saw the confluence of many artistes and connoisseurs who mingled at the Chitrasena Kalayathanaya in August 2018. Moreover, the family has been heavily involved in inculcating a love for dancing in all age groups through dance classes held for over 75 years, as well as specially curated dance workshops, concerts and scholarships for students who are passionate about dancing.

While hardship is what strengthens our inner selves, there were questions posed by Chitrasena that we need to ask ourselves and the authorities concerning the arts and their development in our land. “Yes, there is a burgeoning interest in expanding infrastructure in many different fields as part of post-war development. But what purpose will it serve if there are no artistes to perform in all the new theatres to be built, for instance?” queries Heshma. The new theatres we have now are not even affordable to most local artistes. “When I refer to dance I am not referring to the cabaret versions of our traditional forms. I am talking about the dancers who want to immerse themselves in a manner that refuses to compromise their art for any reason at all, not to cater to the whims and fancies of popular trends, vulgarization for financial gain or simply diluting these sacred art forms to appeal to audiences who are ignorant of their value,” she concludes. There are still a few master artistes and some very talented young artistes who care very deeply about our indigenous art forms and who need to be encouraged and supported to pursue their passion, which will in turn help preserve our rich cultural heritage. But support for the arts is so minimal in our country that one wonders how their steadfast devotion will prevail in this unhinged world where instant fixes run rampant.

Yet the cry of the torchbearers of unpretentious traditional dance theatre in our land is to give it a respectable platform and the support it rightly deserves, and this is an important moment to ensure the survival of our dance. With this thought, one needs to pay homage to Chitrasena, whose influence transcends cultures and metaphorical boundaries, binds the connoisseurs of dance and other art forms, and leaves an indelible mark through the ages.

Amaratunga Arachchige Maurice Dias alias Chitrasena was born on 26 January 1921 at Waragoda, Kelaniya, in Sri Lanka. Meanwhile, in India, Tagore had established his academy, Santiniketan, and his lectures during his visit to Sri Lanka in 1934 inspired a revolutionary change in the outlook of many educated men and women. Tagore stressed the need for a people to discover its own culture in order to assimilate fruitfully the best of other cultures. Chitrasena was a schoolboy at the time, and his father Seebert Dias’ house had become a veritable cultural confluence, frequented by the literary and artistic intelligentsia of the day.

In 1936, at the age of 15, Chitrasena made his debut at the Regal Theatre in the role of Siri Sangabo, in the first Sinhala ballet, produced and directed by his father. Presented in the Kandyan style, with Chitrasena in the lead role, the production created a stir among aficionados, who noticed the boy’s talents. D.B. Jayatilake, who was Vice-Chairman of the Board of Ministers under the British colonial administration, Buddhist scholar, Founder and first President of the Colombo Y.M.B.A., freedom fighter, Leader of the State Council and Minister of Home Affairs, was a great source of encouragement to the young dancer.

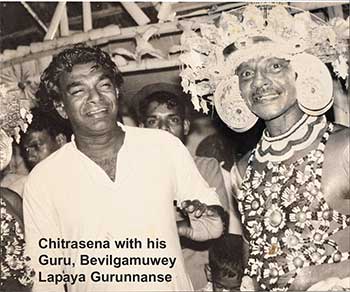

Chitrasena learnt the Kandyan dance from Algama Kiriganitha Gurunnanse, Muddanawe Appuwa Gurunnanse and Bevilgamuwe Lapaya Gurunnanse. Once he had mastered the traditional Kandyan dance, his ‘Ves Bandeema’ (the graduation ceremony in which the ‘Ves Thattuwa’ is placed on the initiate’s head), followed by the ‘Kala-eliya’ mangallaya, took place in 1940. In the same year he went to Travancore to study Kathakali at Sri Chitrodaya Natyakalalayam under Sri Gopinath, court dancer in Travancore. He gave a command performance with Chandralekha (wife of portrait painter J.D.A. Perera) before the Maharaja and Maharani of Travancore at the Kowdiar Palace. He later studied Kathakali at the Kerala Kalamandalam.

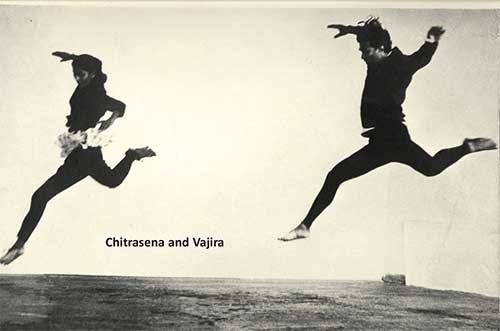

In 1941, Chitrasena performed at the Regal Theatre, in one of the first dance recitals of its kind, before the Governor Sir Andrew Caldecott and Lady Caldecott, with Chandralekha and her troupe. Chandralekha was one of the first women to break into the field of Kandyan dance, followed by Chitrasena’s protégé and soul mate, Vajira, who became the first professional female dancer. Thereafter, Chitrasena and Vajira continued to captivate audiences worldwide with their dynamic performances, which later included their children Upeka and Anjalika, and their students. The matriarch, Vajira, took up the reins at a time when the duo was forced to separate physically following the loss of the house in Colpetty where they had lived and worked for over 40 years. Daughter Upeka then continued to uphold the tradition, leading the dance company to all corners of the globe during a very difficult time in the country. At present, the grandchildren Heshma, Umadanthi and Thaji weave their unique talents and strengths into the legacy inspired by Guru Chitrasena.

Sat Mag

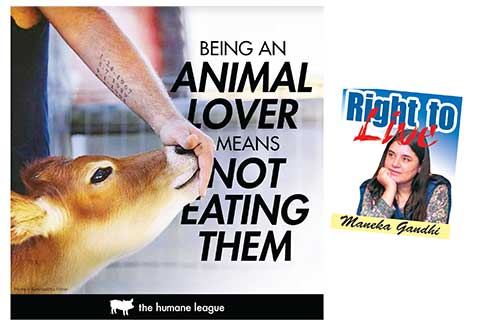

Meat by any other name is animal flesh

In India most animal welfare people are vegetarians. We, in People for Animals, insist on that. After all, you cannot want to look after animals and then eat them. But most meat eaters, whether they are animal people or not, have a hesitant relationship with the idea of killing animals for food. They enjoy the taste of meat, but shy away from making the connection that animals have been harmed grievously in the process.

This moral conflict is referred to, in psychological terms, as the ‘meat paradox’. A meat eater will eat caviar, but he will refuse to listen to someone telling him that it is made from eggs obtained by slitting open the belly of a live pregnant fish. The carnivorous individual simply does not want to feel responsible for his actions. Meat eaters and sellers try to resolve this dilemma by mentally dissociating meat from its animal origins. For instance, ever since large numbers of young people started shunning meat, the meat companies and their allies in government and the nutraceutical industry have deliberately switched to calling it “protein”. This is an interesting manipulation of words and a last-ditch attempt to influence consumer behaviour.

For centuries meat has been part of people’s diet in many cultures. Global meat consumption rose hugely in the 20th century, driven by urbanization, by developments in meat production technology and, most importantly, by the strategies the meat industry used to dissociate the harming of animals from the flesh on the plate. Researchers say “These strategies can be direct and explicit, such as denial of animals’ pain, moral status, or intelligence, endorsement of a hierarchy in which humans are placed above non-human animals” (using religion and god to amplify the belief that animals were created solely for humans, and had no independent importance for the planet, except as food and products). The French are taught, for instance, that animals cannot think.

Added to this is the justification of meat consumption based on spurious nutritional grounds. Doctors and dieticians, who are unwitting tools of the “nutritional science” industry, put their stamp on this shameless hard sell.

The most important of all these strategies, and the one that has a profound effect on meat consumption, is the dissociation of meat from its animal origins. Important studies have been done on this (Kunst & Hohle, 2016; Rothgerber, 2013; Tian, Hilton & Becker, 2016; Foer, 2009; Joy, 2011; Singer, 1995). “At the core of the meat paradox is the experience of cognitive dissonance. Cognitive dissonance theory proposes that situations involving conflicting behaviours, beliefs or attitudes produce a state of mental discomfort (Festinger, 1957).” If a person holds two conflicting or inconsistent pieces of information, he feels uncomfortable. So the mind strives for consistency between the two beliefs and attempts to explain or rationalize them, reducing the discomfort. The person wilfully distorts his or her perception and changes how he or she sees the world.

The meat eater actively employs dissociation as a coping strategy to regulate his conscience, and simply stops associating meat with animals.

In earlier hunter-gatherer and agricultural societies, people killed animals for their table or saw them killed. But since the mid-19th century the eater has been separated from the site of meat production. Singer (1995) says that getting meat from shops or restaurants is the last step of a gruesome process in which everything but the finished product is concealed. The process (loading animals into overcrowded trucks, dragging them into killing chambers, killing, beheading, skinning, cleaning away the blood, removing the intestines and cutting the meat into pieces) is entirely hidden, and the eater is left with neatly packed, ready-to-cook pieces that bear few reminders of the animal. No heads, bones, tails or feet. The industry manipulates the mind of the consumer so that he does not think of the once living, intelligent animal.

The language is changed to conceal the animal. Pigs become pork, sausage, ham and bacon; cows become beef and calves become veal; goats become mutton; and hens become chicken and white meat. And now all of them have become protein.

Then come rituals and traditions that remove any kind of moral doubt. People often take part in rituals and traditions without reflecting on their rationale or consequences. Thanksgiving means turkey; Friday means fish. In India all rituals were vegetarian. Now, many weddings serve meat. Animal sacrifice to the gods is part of this ritual.

Studies have found that people prefer, or actively choose, to buy and eat meat that does not remind them of its animal origins (Holm, 2018; Te Velde et al., 2002). Evans and Miele (2012), who investigated consumers’ interactions with animal food products, showed that the fast pace of food shopping, the presentation of animal foods and the euphemisms used in place of the animal’s name (e.g., pork, beef and mutton) reduced consumers’ ability to reflect on the animal origins of the food they were buying. Kubberød et al. (2002) found that high school students had difficulty connecting different meat products to their animal origins, suggesting that dissociation was deeply entrenched in their consumption habits. Simons et al. found that people differed in what they considered meat: while red meat and steak were seen as meat, more processed and white meat (chicken nuggets, for example) was sometimes not seen as meat at all, and was often left out when participants in the study reported how frequently they ate meat.

Kunst and Hohle (2016) demonstrated how the process of presenting and preparing meat, deliberately turning it from animal into product, led to less disgust and empathy for the killed animal and to higher intentions to eat meat. When the animal-meat link was made obvious, for instance by displaying the lamb or by putting the word cow instead of beef on the menu, consumers avoided the dish and chose a vegetarian alternative. This is an important finding: by interrupting the mental dissociation, meat eating immediately went down. This explains how, during COVID, the pictures of the Chinese eating animals in Wuhan’s markets put off thousands of carnivores and meat sales went down. In experiments by Zickfeld et al. (2018) and Piazza et al. (2018), showing pictures of animals, especially young animals, reduced people’s willingness to eat meat.

Do gender differences exist when it comes to not thinking about the meat one eats?

In Kubberød and colleagues’ (2002) study on disgust and meat consumption, substantial differences emerged between females and males. Men were more aware of the origins of different types of meat, yet did not consider the origins when consuming it. Women reported that they did not want to associate the meat they ate with a living animal, and that reminders would make them uncomfortable and sometimes even unable to eat the meat. In a study by Bray et al. (2016), who investigated parents’ conversations with their children about the origins of meat, women were more likely than men to avoid these conversations with their children, as they felt more conflicted about eating meat themselves. In a study by Kupsala (2018) female consumers expressed more tension related to the thought of killing animals for food than men. The supermarket customer group preferred products that did not remind them of animal origins, and showed a strong motivation to avoid any clues that highlighted the meat-animal connection. What emerged was that the females felt that contact with, and personification of, food producing animals would sometimes make it impossible for them to eat animal products.

What other dissociation techniques do companies and societies use to make people eat meat? For men, the advertising is direct: masculinity, the inevitable fate of animals, the generational traditions of their family. For women it is far more indirect: simply hiding the source of the meat and giving the animal victim a cute name to prevent disgust and avoidance.

Kubberød et al. (2002) compared groups from rural and urban areas but found little evidence of differences between them. Moreover, both urban and rural consumers in the study agreed that meat packaging and presentation served to conceal the link between the meat and the once living animal. Both groups of respondents also stated that if pictures of tied-up pigs or pigs in stalls were printed on packages of pork, or pictures of caged hens on egg cartons, they would not buy the product in question.

Are people who are sensitive to disruptions of the dissociation process (or, in plain English, open to learning the truth about the lies they tell themselves) more likely to become vegetarians? Probably. Everyone has a conscience. The meat industry has tried to make you bury it. We, in the animal welfare world, should try to make it active again.

(To join the animal welfare movement, contact gandhim@nic.in, www.peopleforanimalsindia.org)